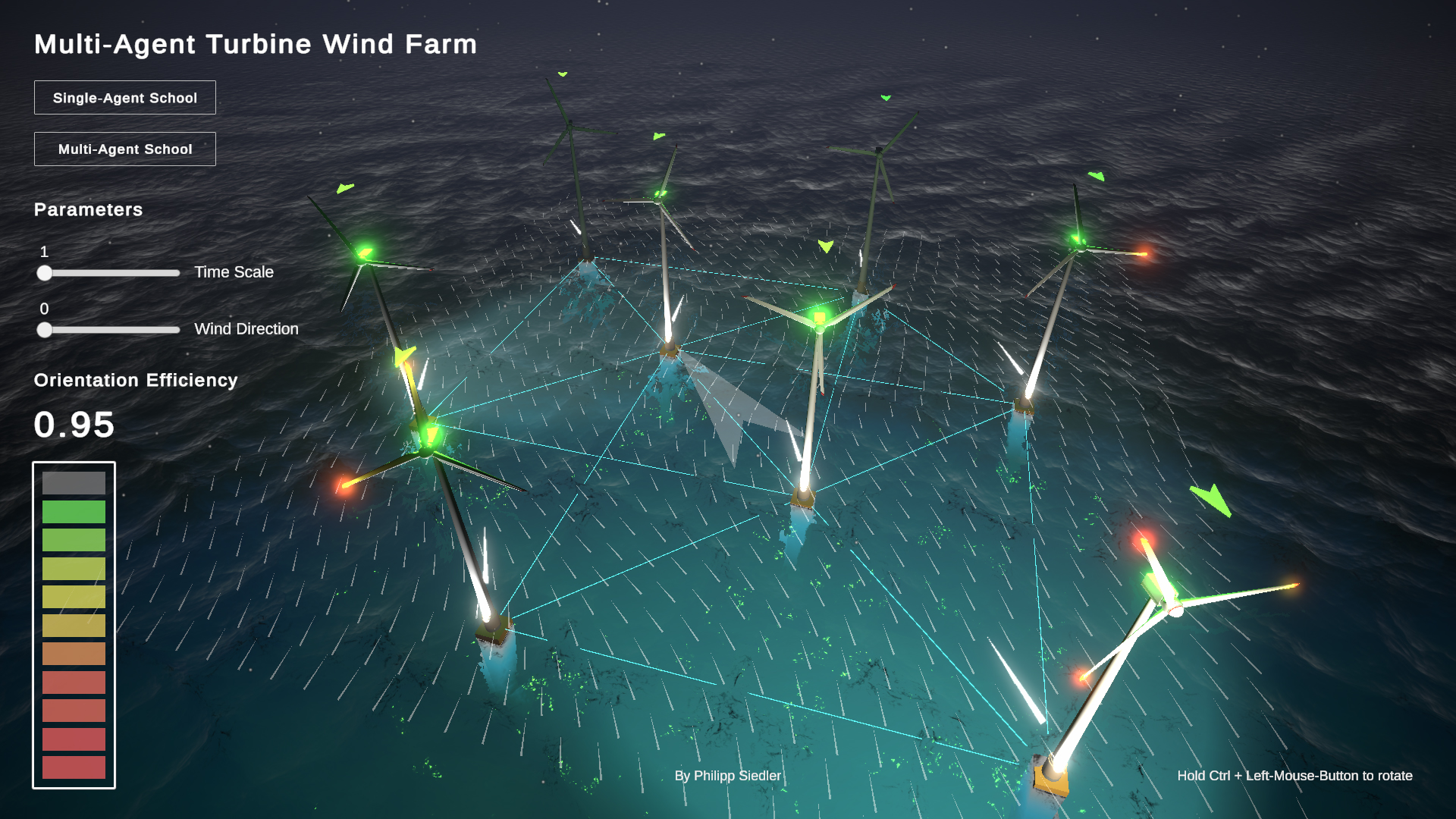

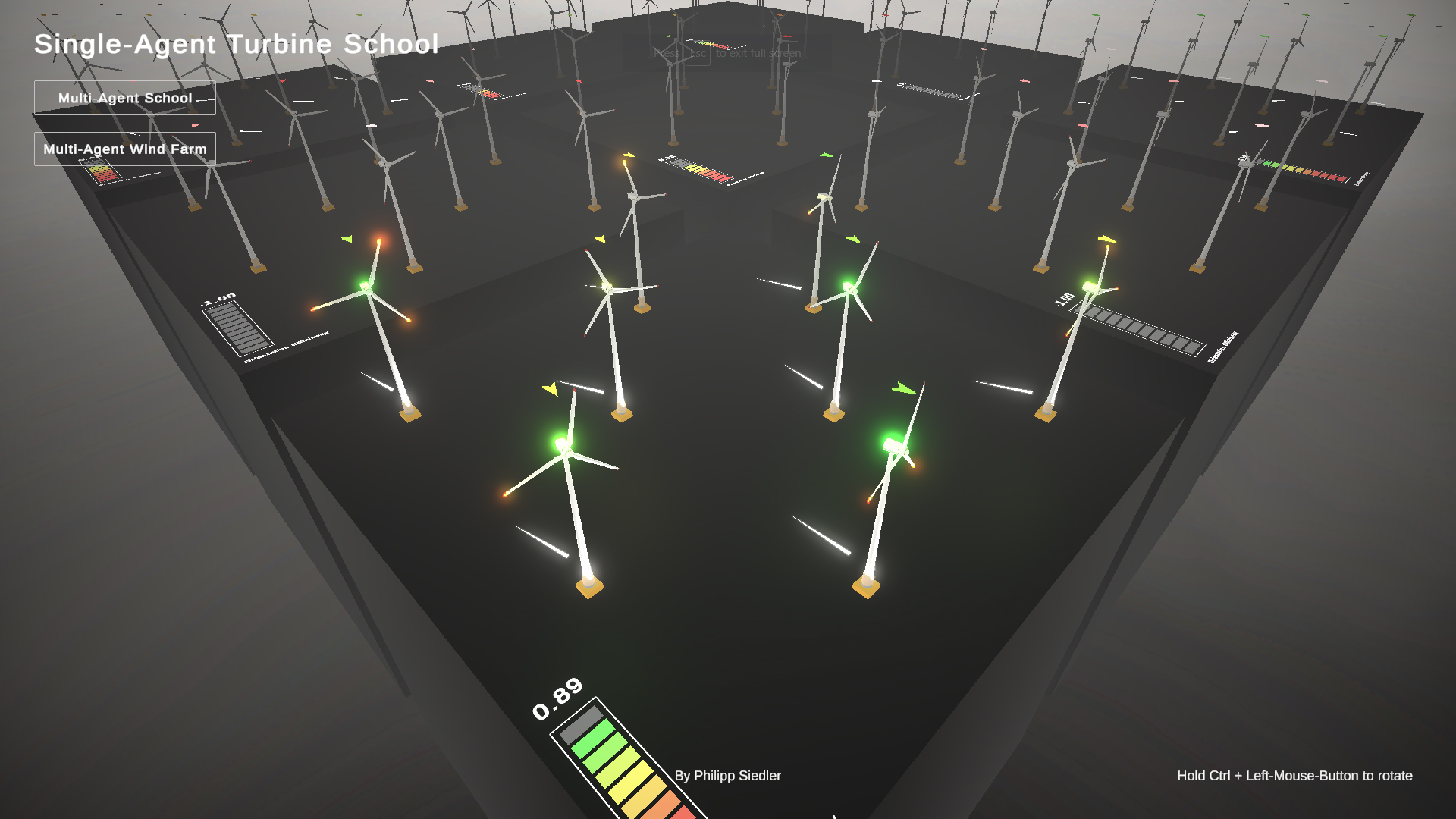

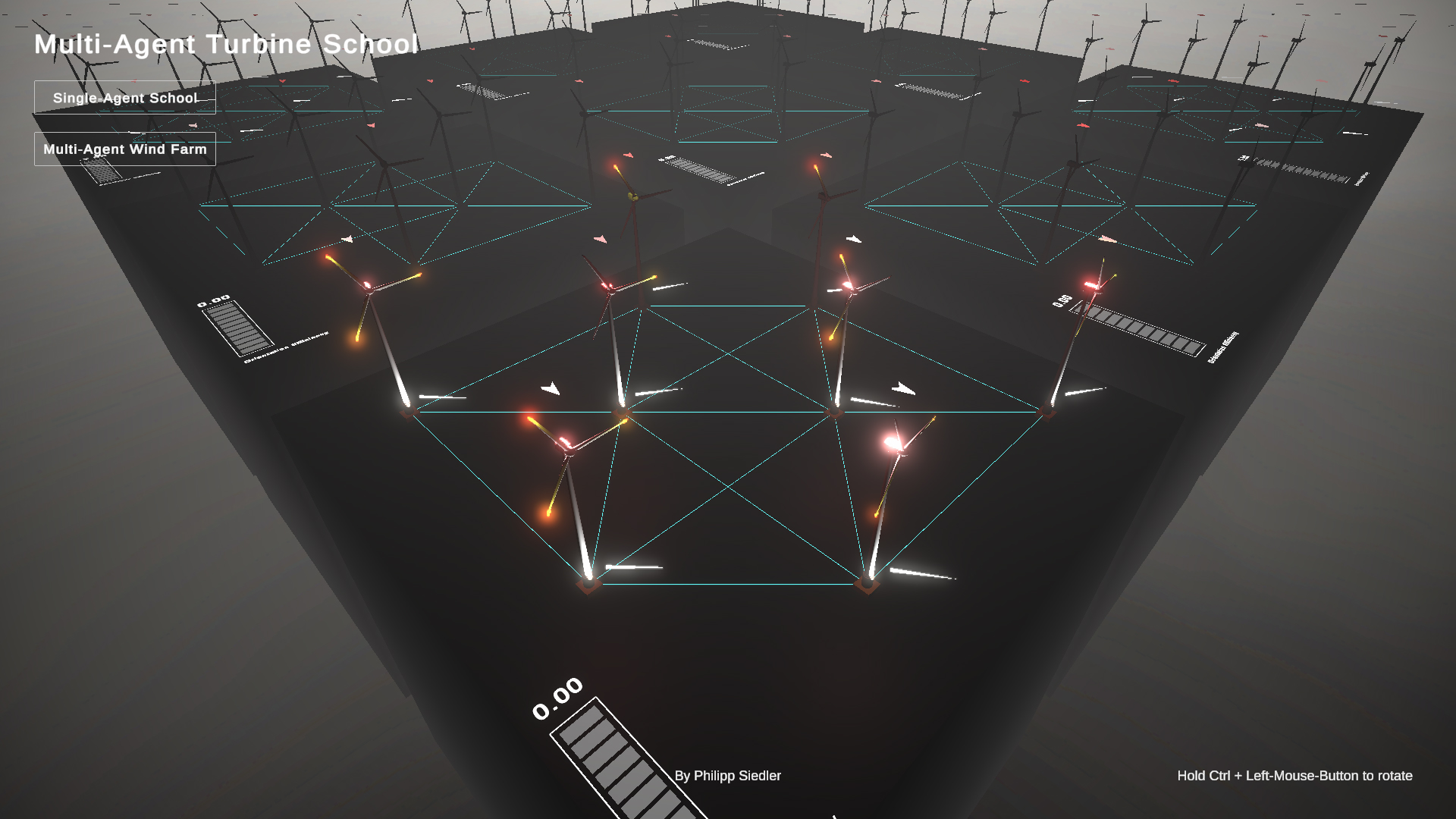

Multi-agent collaboration has been a developing interest of mine, especially how agents could communicate with each other. Could they develop their own language? Does communication have a cost? And can collaboration and communication between decentralized agents surpass traditional reinforcement learning setups with a single agent and environment? Those are the questions I started asking myself, and this project grew from an initial sketch into a way to explore them. As a proof of concept, I have set up an experiment with two competing agent environments: one with a single agent controlling all turbines in a wind farm, and the other with each turbine being an agent that makes its own decisions. The collective of agents can communicate and share data with a set number of neighbours, share wind directions, and learn to predict changes locally in order to optimize orientation and therefore the energy generated by the wind farm.

I was inspired by a real-world problem solved by DeepMind, which reduced the energy used to cool Google's data centres by 40%: https://deepmind.com/blog/article/deepmind-ai-reduces-google-data-centre-cooling-bill-40

Following that, I thought about optimizing the generation of energy using RL. This sketch can also be explored as a WebApp here: https://philippds-pages.github.io/RL-Wind-Farm_WebApp/

Offshore Wind Turbine Farm

Multi-Agent Collaboration

Agent & Environment Setup:

Goal: Maximize orientation efficiency. A turbine is optimally oriented against the wind.

Agents: The single-agent environment contains one agent that controls all turbines. In the multi-agent environment, each turbine is an agent.

Agent Reward Function: A negative reward from -0.0 to -1.0 when the turbine is rotated with the wind direction (270 to 90 degrees). A positive reward from +0.1 to +1.0 when the turbine is rotated against the wind direction (90 to 270 degrees).
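
The reward ranges above could be sketched as a function of the angle between the turbine and the wind. This is only a minimal sketch: the cosine shape, the function name `orientation_reward`, and the use of headings in degrees are my assumptions for illustration, not the project's actual implementation, and a cosine yields rewards from 0 to 1 rather than the exact +0.1 lower bound listed above.

```python
import math


def orientation_reward(turbine_heading_deg: float, wind_heading_deg: float) -> float:
    """Hypothetical reward in [-1, 1] for a turbine's orientation.

    Assumption: a relative angle of 0 degrees means the turbine is rotated
    with the wind (worst case), 180 degrees means it is rotated against the
    wind (best case).
    """
    relative = math.radians(turbine_heading_deg - wind_heading_deg)
    # cos is positive between 270 and 90 degrees (through 0), so negating it
    # gives a negative reward there and a positive reward between 90 and 270.
    return -math.cos(relative)


if __name__ == "__main__":
    for angle in (0, 45, 90, 135, 180, 270):
        print(angle, round(orientation_reward(angle, 0.0), 3))
```

A smooth shape like this gives the agent a gradient to follow at every angle, but a piecewise-linear mapping would satisfy the listed ranges just as well.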

Behavior Parameters:

Single Agent Observation space:

Agent Vector Observations: 72

Total Vector Observations per Wind Farm: 72

For all turbines in farm:

Turbine direction vector

Turbine location vector

Wind direction vector at turbine

Multi Agent Observation space:

Agent Vector Observations: 27

Total Vector Observations per Wind Farm: 216

Individual Agent:

Turbine direction vector

Turbine location vector

Wind direction vector at turbine

For all neighbours:

Turbine location vector

Wind direction vector at turbine
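
The observation counts above can be cross-checked with a little arithmetic. The sketch below assumes each vector has 3 components, and infers 8 turbines and 3 neighbours per turbine because those values reproduce the listed totals (72, 27, and 216). None of these three numbers is stated explicitly, so treat them as assumptions.

```python
VECTOR_SIZE = 3   # assumption: every observation vector is (x, y, z)
TURBINES = 8      # assumption: inferred from 216 / 27
NEIGHBOURS = 3    # assumption: inferred from (27 - 9) / 6


def single_agent_observations(turbines: int) -> int:
    # For every turbine: direction, location, and wind direction vectors.
    return turbines * 3 * VECTOR_SIZE


def multi_agent_observations(neighbours: int) -> int:
    # Own turbine: direction, location, and wind direction vectors.
    own = 3 * VECTOR_SIZE
    # Each neighbour: location and wind direction vectors only.
    per_neighbour = 2 * VECTOR_SIZE
    return own + neighbours * per_neighbour


if __name__ == "__main__":
    print(single_agent_observations(TURBINES))              # single agent
    print(multi_agent_observations(NEIGHBOURS))             # per turbine agent
    print(multi_agent_observations(NEIGHBOURS) * TURBINES)  # whole wind farm
```

Under these assumptions each turbine agent sees far fewer values than the single agent, while the farm as a whole processes three times as many observations because neighbour data is duplicated across agents.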

Actions: 3 discrete actions: rotate left, do nothing, rotate right.
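
As a rough illustration of the three discrete actions, the sketch below maps an action index to a change in turbine heading. The index order, the step size `ROTATION_STEP_DEG`, and the convention that rotating left decreases the heading are all hypothetical choices made for this example.

```python
ROTATION_STEP_DEG = 5.0  # hypothetical rotation per step, not from the project

# Assumed index order: 0 = rotate left, 1 = do nothing, 2 = rotate right.
ROTATE_LEFT, DO_NOTHING, ROTATE_RIGHT = 0, 1, 2


def apply_action(heading_deg: float, action: int) -> float:
    """Return the new heading in [0, 360) after applying a discrete action."""
    if action not in (ROTATE_LEFT, DO_NOTHING, ROTATE_RIGHT):
        raise ValueError(f"unknown action: {action}")
    # action - 1 yields -1, 0, or +1, i.e. left, none, right.
    delta = (action - 1) * ROTATION_STEP_DEG
    return (heading_deg + delta) % 360.0


if __name__ == "__main__":
    heading = 0.0
    for action in (ROTATE_RIGHT, ROTATE_RIGHT, DO_NOTHING, ROTATE_LEFT):
        heading = apply_action(heading, action)
        print(action, heading)
```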

Benchmark Max Reward: 2000

Hyperparameters:

Single-Agent

Multi-Agent

Single-Agent Training: Max. 100k Step Count

Multi-Agent Collaboration Training: Max. 100k Step Count

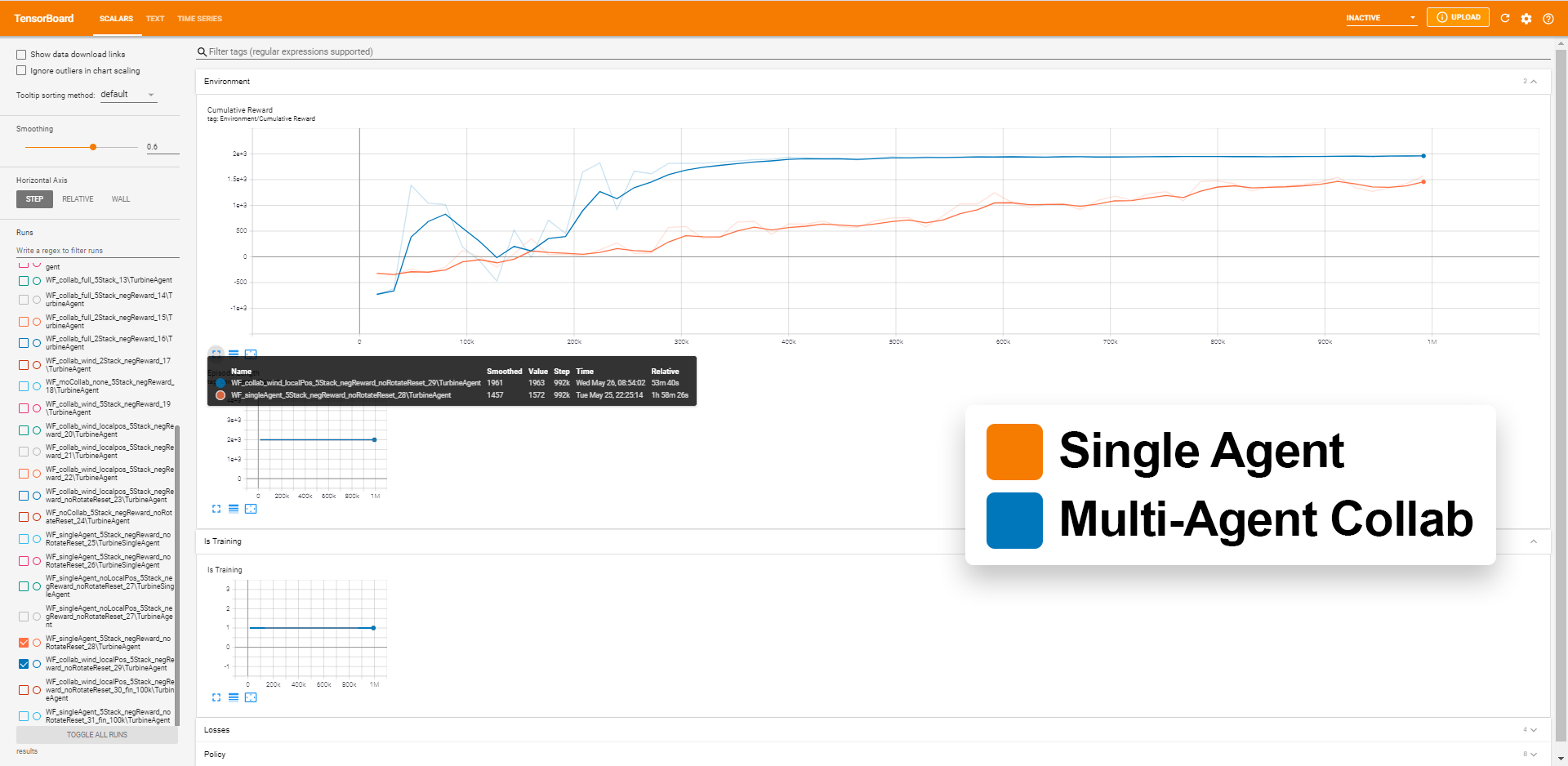

TensorBoard Results: Max. 1 million Step Count

Single Agent: 1h 58m 26s - Mean Cumulative Reward: 1572

Multi-Agent Collab: 53m 40s - Mean Cumulative Reward: 1963
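
To put the two results side by side, the short sketch below expresses each mean cumulative reward as a share of the benchmark max reward of 2000 and compares the training times. It only uses the numbers listed above; the helper names are mine.

```python
BENCHMARK_MAX_REWARD = 2000

# Training time in seconds and mean cumulative reward, as listed above.
runs = {
    "Single Agent": {"seconds": 1 * 3600 + 58 * 60 + 26, "reward": 1572},
    "Multi-Agent Collab": {"seconds": 53 * 60 + 40, "reward": 1963},
}


def share_of_benchmark(reward: float) -> float:
    """Fraction of the benchmark max reward that a run reached."""
    return reward / BENCHMARK_MAX_REWARD


def time_ratio(slower: str, faster: str) -> float:
    """How many times longer the slower run trained than the faster one."""
    return runs[slower]["seconds"] / runs[faster]["seconds"]


if __name__ == "__main__":
    for name, run in runs.items():
        print(f"{name}: {share_of_benchmark(run['reward']):.1%} of benchmark")
    ratio = time_ratio("Single Agent", "Multi-Agent Collab")
    print(f"Single agent trained {ratio:.2f}x longer")
```

With these figures the multi-agent setup reaches about 98% of the benchmark in less than half the training time, while the single agent reaches about 79%.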